Beyond the Numbers: Why Test Coverage Alone Doesn’t Guarantee Quality

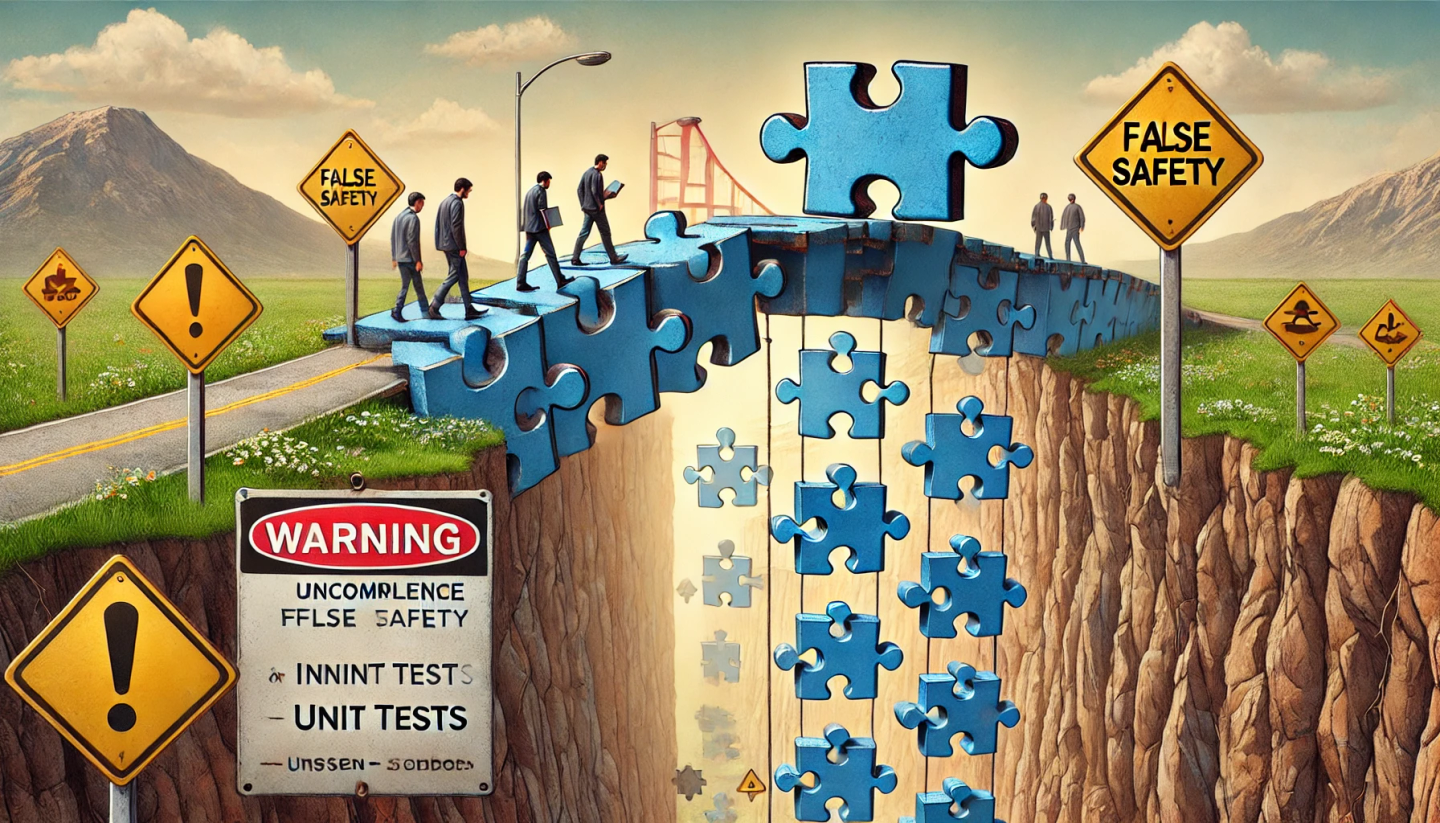

In the world of software development, few metrics receive as much attention as test coverage. It’s an easy number to understand: “X% of our code is covered by tests.” This figure, however, can often give a false sense of security. While coverage has value as a quick indicator, it says nothing about the quality or effectiveness of the tests themselves.

In fact, some teams obsess over hitting arbitrary coverage targets—like 80%, 90%, or even 100%—while neglecting a crucial part of the equation: Are the tests actually validating that the code does what it’s supposed to do?

The Problem With “Check-the-Box” Tests

When a coverage requirement becomes a quota, it can tempt developers to write tests whose sole purpose is to execute every line of code. Imagine a scenario where you have functions that generate complex reports, send notifications, or perform important business logic. A coverage-focused test might call each function in isolation, ensuring that every branch is followed. But if the test never asserts the correctness of the output or checks the side effects, it’s not truly verifying anything valuable.

For example, consider a snippet of code like this:

    def calculate_discount(price, customer_type):
        if customer_type == "VIP":
            return price * 0.8
        else:
            return price

A “coverage-first” test might look like:

    def test_calculate_discount_coverage():
        calculate_discount(100, "VIP")
        calculate_discount(100, "regular")

This test covers both branches of the if statement. On paper, you’ve achieved 100% coverage for calculate_discount(). But notice what’s missing: there’s no verification. The test doesn’t confirm that a VIP gets a 20% discount or that a regular customer pays full price. If the logic was inverted, the test would still pass, and you’d never know.
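The inverted-logic claim is easy to check by hand. The sketch below is illustrative only: calculate_discount_inverted, coverage_only_test, and asserting_test are hypothetical names made up for this demonstration, and the tests are written as plain functions that return a boolean so the two styles can be compared side by side.

```python
def calculate_discount(price, customer_type):
    # The function from the article, unchanged.
    if customer_type == "VIP":
        return price * 0.8
    else:
        return price


def calculate_discount_inverted(price, customer_type):
    # Hypothetical buggy variant: the two branches are swapped by mistake.
    if customer_type == "VIP":
        return price
    else:
        return price * 0.8


def coverage_only_test(discount_fn):
    # Executes both branches but asserts nothing, so it can only fail
    # if the function raises an exception.
    discount_fn(100, "VIP")
    discount_fn(100, "regular")
    return True


def asserting_test(discount_fn):
    # Checks the business rule itself: VIPs pay 80, everyone else pays 100.
    return discount_fn(100, "VIP") == 80 and discount_fn(100, "regular") == 100


print(coverage_only_test(calculate_discount_inverted))  # True: the bug goes unnoticed
print(asserting_test(calculate_discount_inverted))      # False: the bug is caught
print(asserting_test(calculate_discount))               # True: the correct code passes
```

Both test styles reach 100% line and branch coverage of the function they are given. Only the asserting one can tell the correct implementation from the broken one.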

Why This Is Dangerous

False Confidence: The team celebrates hitting a 95% coverage metric, believing the code is well-tested. Meanwhile, glaring logic errors might remain undiscovered.

Wasted Effort: Writing tests that just execute code without assertions consumes time and resources with no real payoff. Developers could be investing that effort into creating meaningful tests that genuinely improve reliability.

Hard-to-Detect Bugs: If no one is checking the correctness of outcomes, subtle defects can slip through. Business-critical features could fail silently, surfacing only once users start complaining.

A More Meaningful Approach

To ensure your tests are actually useful, shift your mindset:

Focus on Intent, Not Lines: Instead of chasing coverage numbers, start by asking what the code is supposed to do. Write tests that validate outcomes—did the function return the correct value? Did it raise an exception when given invalid input?

Use Assertions Wisely: Assertions are your primary tool. For instance, a better test for the discount function would explicitly check that the VIP discount is applied:

    def test_calculate_discount_for_vip():
        assert calculate_discount(100, "VIP") == 80

    def test_calculate_discount_for_regular():
        assert calculate_discount(100, "regular") == 100

Now, if someone accidentally breaks the logic, the test will fail, signaling a real issue rather than passing silently.

Cover Critical Paths, But Verify Them Too: Coverage is helpful to ensure you’re not ignoring important parts of your code. Just make sure that when you do cover a branch, you’re also testing that branch’s behavior. Ensure that the code under test is doing what the business logic demands.
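The three points above can be combined in one small sketch. It assumes a hypothetical variant, calculate_discount_checked, that rejects negative prices; the function shown earlier performs no validation, so the ValueError behavior is an assumption made purely for illustration. The tests use only plain Python so they run without any test framework.

```python
def calculate_discount_checked(price, customer_type):
    # Hypothetical variant of calculate_discount that refuses negative
    # prices. The validation rule is an assumption for this example.
    if price < 0:
        raise ValueError("price must be non-negative")
    if customer_type == "VIP":
        return price * 0.8
    return price


def test_rejects_negative_price():
    # Intent: invalid input must be refused, not silently discounted.
    try:
        calculate_discount_checked(-1, "VIP")
    except ValueError:
        return  # expected outcome
    raise AssertionError("expected ValueError for a negative price")


def test_each_branch_returns_expected_value():
    # Every branch that gets covered also gets its result verified.
    cases = [
        (100, "VIP", 80),       # VIP branch: 20% off
        (100, "regular", 100),  # default branch: full price
        (0, "VIP", 0),          # boundary: a zero price stays zero
    ]
    for price, customer_type, expected in cases:
        assert calculate_discount_checked(price, customer_type) == expected
```

With pytest, the first test is usually written with the pytest.raises context manager instead of try/except; the hand-rolled form is used here only to keep the example dependency-free.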

In Conclusion

Test coverage can be a useful metric, but only if we understand its limitations. Having a high coverage number might look good to stakeholders, but it’s not worth much if it doesn’t correlate with quality. Meaningful assertions, tests aligned with business goals, and a focus on verifying outcomes over merely touching lines of code will lead to a healthier, more robust codebase.

Don’t let coverage become a box-ticking exercise. Instead, let it be a springboard to ask more meaningful questions about code correctness and reliability. After all, it’s not about how many lines of code you’ve tested—it’s about how well you’ve tested them.